Charlie Munger is the first lawyer I knew who carefully studied “standard thinking errors.” He talked about them in his famous speech, “The Psychology of Human Misjudgment.”

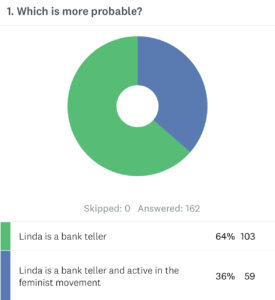

My last post offered a well-known test of a thinking error called the conjunction fallacy. As I write this, 162 people have taken the test, and 36% fell prey to the conjunction fallacy.

When the original test was given (obviously in a more rigorous setting), more people were supposedly fooled.

Confidence can be dangerous

Merely being aware of the existence of so-called thinking errors is not enough to prevent oneself from falling prey to them in the real world.

In other words, it’s one thing to know about the Linda problem and “get the answer right” (as many people who took the test in my last post did). But it’s another to face a real-world scenario and avoid making the same error.

How can we avoid error?

Munger advises becoming familiar with as many thinking errors as possible. If you want to follow his advice, start with this excellent book:

- Thinking, Fast and Slow, by Daniel Kahneman (a Nobel Prize-winning scientist and the co-author of the aforementioned “Linda test”)

Kahneman’s book drives home that it’s easier to recognize other people’s mistakes than our own. This is a big reason we tend to be overly confident in our opinions.

Or as the eminently clear thinker Bertrand Russell once observed…

“The fundamental cause of the trouble is that in the modern world the stupid are cocksure while the intelligent are full of doubt.”

Important takeaway: People who believe they’re immune to thinking errors are more likely to make mistakes.

Building Immunity Takes Time

If you want to immunize yourself against thinking errors, you need to do more than read about them and memorize a list.

You need to assimilate and internalize, and this takes time. You need reps out there in the real world, where the proverbial rubber meets the road.

It’s important to realize there are many types of thinking errors. And, as Munger points out, they frequently combine, so you’ll often be dealing with more than one at a time.

For example, in addition to the conjunction fallacy, other cognitive biases might be coming into play in the Linda problem.

Stories can lead you astray

The narrative fallacy may also explain why people get tripped up by the Linda problem.

The narrative fallacy is about our tendency to create stories with simplistic cause-and-effect explanations for otherwise random details and events. In short, our brains are wired to create all kinds of reflexive stories.

So let’s reread the passage:

Linda is 31 years old, single, outspoken, and bright. She majored in Philosophy. As a student, she was deeply concerned with issues of discrimination and social justice, and she also participated in anti-nuclear demonstrations.

After reading that passage, many people are itching to use that information to create a short story, or to find one in a short sentence like this one:

“Linda is a bank teller and active in the feminist movement”

Why not jump to the conclusion that Linda is a feminist after reading all that preamble?

And is there an answer that allows us to do that? Why, yes there is. So let’s pick that one.
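The trap is that the “story” answer can never be more probable than the plain one: every feminist bank teller is also a bank teller, so P(teller and feminist) ≤ P(teller). The short Python sketch below is one way to see this. It is an illustration, not part of the original test: the population size, the trait probabilities, and the function name `linda_shares` are all hypothetical assumptions.

```python
import random


def linda_shares(n=10_000, seed=42, p_teller=0.05, p_feminist=0.6):
    """Return (share who are tellers, share who are tellers AND feminists).

    The population and both probabilities are made-up, illustrative
    assumptions; they are not data from the original Linda study.
    """
    rng = random.Random(seed)  # seeded so the run is repeatable
    tellers = 0
    feminist_tellers = 0
    for _ in range(n):
        is_teller = rng.random() < p_teller
        is_feminist = rng.random() < p_feminist
        if is_teller:
            tellers += 1
            if is_feminist:
                # Every feminist teller was already counted as a teller,
                # so this count can never exceed `tellers`.
                feminist_tellers += 1
    return tellers / n, feminist_tellers / n


teller, feminist_teller = linda_shares()
print(f"bank teller:              {teller:.3f}")
print(f"bank teller AND feminist: {feminist_teller:.3f}")
```

Raising `p_feminist` toward 1.0 (i.e., assuming nearly every teller is a feminist) only brings the two shares closer together; the conjunction never overtakes the single trait, however compelling the story.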

Short Stories are Fun

Fun fact: we’re capable of fabricating interesting stories that are also incredibly short. In fact, Hemingway reportedly claimed the passage below was the greatest short story ever written.

Note that it has only six words.

For Sale.

Baby shoes.

Never worn.

The first four words aren’t enough for a story. But add those last two words, and voilà…

Your brain instantly fabricates reasons why the shoes were never worn (e.g., the baby died before it could wear them).

Why we like stories so much

We tend to lapse into the narrative fallacy because our brains have a primitive need to make sense of the uncertain and often unpredictable world.

We can’t eliminate uncertainty, but we definitely can do a better job of dealing with it.

The Simplistic Binary World

We shouldn’t deal with uncertainty by oversimplifying. Too many people default to simplistic binary thinking (e.g. characterizing things in terms of right and wrong, or good and bad).

To avoid falling prey to simplistic binary thinking, I recommend reading:

- Thinking in Bets, by Annie Duke

Duke’s book will help you learn to think in terms of probabilities when evaluating outcomes, which will help you make better decisions. By the way, her book is not only fascinating, but also exceptionally well written.

And, if you haven’t done so already, subscribe to her Substack newsletter, which is also called Thinking In Bets.

(In fact, many of my Substack newsletter subscribers were referred to me by her public recommendation of my newsletter. Thanks Annie!)

Okay, let’s end with one last cognitive glitch…

Beware of Availability Bias

The ease with which we remember something often leads us to misjudge the probability of an event happening.

Let me give you a real-world example.

In April 2018, a Southwest Airlines passenger was killed when she was partially sucked out of the cabin at 30,000 feet. She was sitting in row 14, next to the window, where debris struck the plane.

Shortly afterward, I was talking with a well-educated, intelligent woman who travels extensively around the world and owns homes in several cities.

Upon hearing about the Southwest accident she exclaimed that she would “never sit in the window seat of a plane again.”

To her, this was a perfectly sensible conclusion. But, obviously, the probability of suffering the same misfortune as the poor woman on the Southwest flight is minuscule. So why make such a ludicrous statement?

Because the recent news event was sensational and easily came to her mind.

Think Differently

Is this a good way to make decisions? Definitely not.

And yet well-educated, intelligent people fall prey to some form of availability bias all the time. One solution for avoiding this problem is to pay as little attention to sensational “news” as possible.

Another trick is to remind yourself of Daniel Kahneman’s insight that “nothing is as important as you think it is while you’re thinking about it.”

Reason From First Principles

Finally, consider what Nobel Prize-winning physicist Richard Feynman famously said:

“The first principle is not to fool yourself – and you are the easiest person to fool.”

The first principle to realize and internalize is this: the human brain is hard-wired to make predictable misjudgments in many situations.

Conclusion

If you don’t know about those misjudgments, you’re more likely to fall prey to them. Of course, learning about these misjudgments is no picnic. In fact, if you try you’ll probably feel uncomfortable at times.

But if knowledge were easy to acquire…well, think about it.
